Artificial intelligence is changing how we work with data and applications. As AI gets smarter, it needs better ways to connect safely and easily to different data sources and tools.

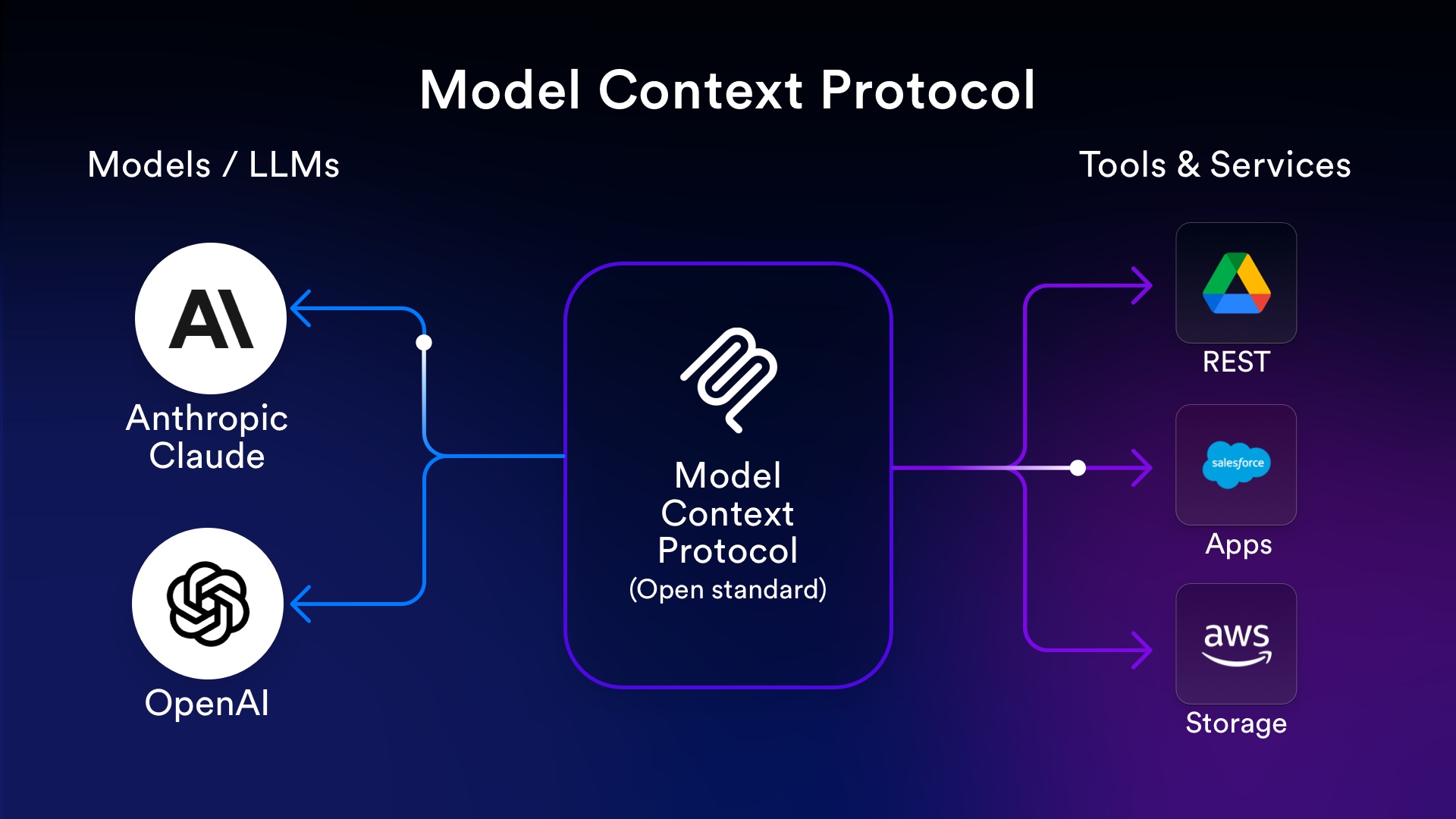

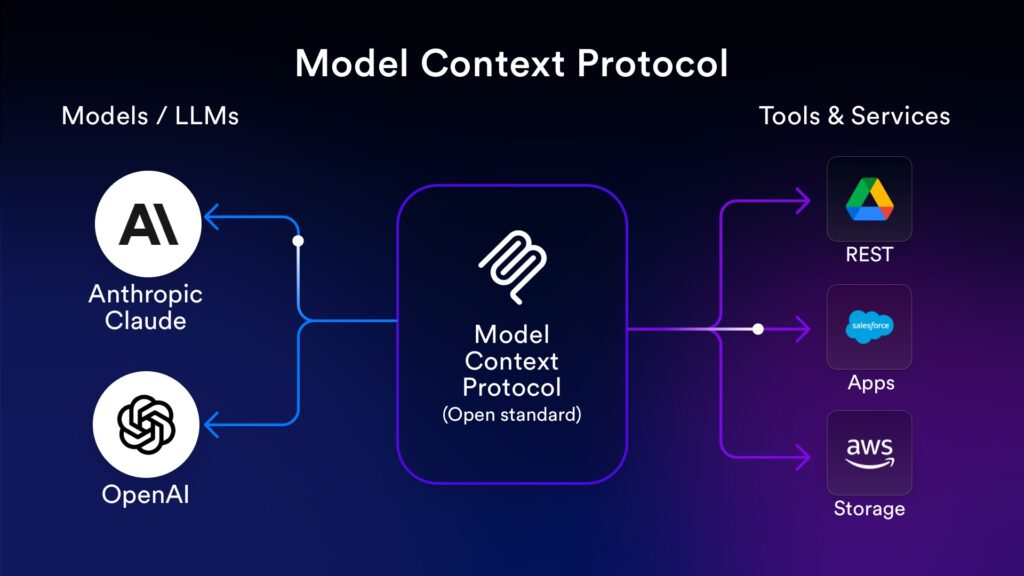

The Model Context Protocol (MCP) is an open standard that helps AI systems share information and collaborate by creating a common way to link data, tools, and models.

MCP acts as a bridge between AI models and outside resources. Developers can use MCP to let their applications provide needed context to AI, or to build AI-powered tools that connect to these apps.

This protocol aims to simplify how AI systems are designed and makes it easier to create secure, flexible integrations. If you’re curious about why some call MCP the “USB-C port” for AI, check out this introduction to Model Context Protocol.

Key takeaways

- MCP lets AI systems connect and share information using a standard method.

- It supports safer and simpler integration with different tools and data.

- Developers can use MCP to build flexible, modular AI-powered applications.

What is the Model Context Protocol (MCP) in AI?

The Model Context Protocol (MCP) lets AI systems access and use external data and tools in a standardised way. MCP makes AI models more flexible, so they can interact with a wider range of services beyond what they were originally trained to do.

Purpose and role in modern AI

MCP helps AI models go beyond their static training data. It gives large language models (LLMs) and other AI systems a way to connect with outside information smoothly.

For example, with MCP, an AI chatbot can pull in real-time weather data or interact with calendars and other digital tools. This protocol bridges the gap between what a model knows and what it needs to know for real-world tasks.

Developers don’t have to build custom integrations for every new tool or data source anymore. MCP keeps these connections reliable and repeatable, so AI models can stay up to date.

It also supports AI use cases, like task automation and live data retrieval, that used to require a custom integration for every tool.

Overview of protocol design

Model Context Protocol works a lot like a USB-C connector, but for AI systems. It gives AI models a unified way to talk to different tools, databases, and APIs.

MCP is open and standardised, so it can work across many platforms and providers. The protocol sets clear rules for how AI models and software exchange data—how requests are made, which formats are allowed, and how responses are handled.

It also covers how tools authenticate and how errors get managed between systems. MCP doesn’t tie itself to any one AI vendor, which means it aims for broad compatibility. This approach leads to faster development cycles and more reliable systems.

Key concepts and terminology

To get a grip on MCP, it helps to know the main terms you’ll run into:

- Context: The extra information or data an AI model uses for a specific task.

- Connector: The software layer that links an AI model with an external tool or database using MCP.

- Request: The message sent from the AI model or agent to ask for information or trigger a task externally.

- Response: The information or result returned by the external tool back to the AI model.

- Standardisation: Making sure that tools and models communicate in the same way.

MCP’s main idea is to keep these exchanges simple, secure, and repeatable. Using these terms and methods, AI systems can better understand and use live data, which really boosts their usefulness in everyday tasks.
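To make the request and response terms concrete, here is a minimal sketch of the two message shapes. It assumes the JSON-RPC 2.0 framing that MCP messages use; the helper functions, the `get_weather` tool name, and its arguments are made up for illustration and are not part of any SDK.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids; any unique value would do


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request message."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_response(request, result):
    """Build the matching response; the shared id ties it to the request."""
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


def make_error(request, code, text):
    """Build an error response instead of a result."""
    return {"jsonrpc": "2.0", "id": request["id"],
            "error": {"code": code, "message": text}}


# Hypothetical tool name and arguments, for illustration only.
request = make_request("tools/call",
                       {"name": "get_weather", "arguments": {"city": "Oslo"}})
response = make_response(request,
                         {"content": [{"type": "text", "text": "4 C, overcast"}]})

print(json.dumps(request))
print(json.dumps(response))
```

The id is what lets a client match each response to the request that caused it, even when several requests are in flight.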

If you want a detailed breakdown of how MCP standardises AI connections, Cloudflare’s explanation of MCP is worth a look.

Architecture of MCP-based AI systems

The Model Context Protocol (MCP) shapes how AI systems connect, interact with data, and get things done. It follows set patterns and uses specific roles to keep systems open, flexible, and scalable.

Client-server architecture

MCP uses a client-server architecture that lets different programs work together. The core parts are the MCP host, MCP clients, and MCP servers.

The host, which might be an AI assistant or another tool, sends requests for data or functions it needs. MCP clients act as go-betweens, forwarding requests from the host to one or more MCP servers.

Each server exposes certain capabilities, like file access, code execution, or custom data sources. This split helps avoid bottlenecks and keeps tasks organised.

Most MCP-based systems use a 1:1 connection model between MCP clients and servers, which keeps communication clear and direct. The design makes it easy to scale by adding more servers as needed.

AI assistants can connect to all sorts of data sources without big changes. If you want more details, check out the client-server model in MCP.
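The host, client, and server roles can be sketched in a few lines. This is a toy model under simple assumptions, not an SDK: the class names, the `files` and `math` servers, and their capabilities are all hypothetical, and real servers would sit behind a transport instead of a direct method call.

```python
class Server:
    """Stand-in for an MCP server exposing a few named capabilities."""

    def __init__(self, name, capabilities):
        self.name = name
        self.capabilities = capabilities  # capability name -> callable

    def handle(self, capability, **kwargs):
        return self.capabilities[capability](**kwargs)


class Client:
    """One client holds exactly one server connection (the 1:1 model)."""

    def __init__(self, server):
        self.server = server

    def request(self, capability, **kwargs):
        return self.server.handle(capability, **kwargs)


class Host:
    """The host owns one client per server and routes each request."""

    def __init__(self):
        self.clients = {}

    def connect(self, server):
        self.clients[server.name] = Client(server)

    def call(self, server_name, capability, **kwargs):
        return self.clients[server_name].request(capability, **kwargs)


host = Host()
host.connect(Server("files", {"read": lambda path: f"contents of {path}"}))
host.connect(Server("math", {"add": lambda a, b: a + b}))

print(host.call("files", "read", path="notes.txt"))
print(host.call("math", "add", a=2, b=3))
```

Adding a server means adding one more client to the host; nothing else in the host has to change, which is where the scaling benefit comes from.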

Session-based workflow

MCP uses sessions, called MCPSessions, to manage and track activities. A session logs requests, data, and responses between the MCP host, client, and server during a specific task.

This setup keeps tasks organised and easy to repeat. Sessions make it possible to pause and resume tasks without losing information.

For example, when an AI assistant handles a multi-step job, it can store the session and pick up where it left off. Sessions also help with debugging or auditing, since every action is saved in context.

Some frameworks, like the MCP Java SDK, use this session-based workflow to manage long-running jobs or complex queries. The session links all parts of an interaction, so users and developers can see how data and actions move through the system.

This structure comes in handy for multi-agent AI systems handling shared tasks and workloads.
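Here is one way the pause-and-resume idea could look. It is a sketch only: `SessionLog`, its fields, and the three-step plan are hypothetical and do not mirror any SDK's session type. The point is that a session that records every exchange can be serialised and picked up later.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class SessionLog:
    session_id: str
    steps: list = field(default_factory=list)  # ordered record of exchanges
    next_step: int = 0                         # index of the next step to run

    def record(self, request, response):
        self.steps.append({"request": request, "response": response})
        self.next_step += 1

    def save(self):
        """Serialise the session so the task can be paused."""
        return json.dumps(asdict(self))

    @classmethod
    def load(cls, blob):
        """Rebuild a session from its saved form."""
        return cls(**json.loads(blob))


plan = ["fetch_report", "summarise", "email_summary"]  # hypothetical steps

session = SessionLog("demo-1")
session.record(plan[0], "report fetched")
saved = session.save()               # pause here

resumed = SessionLog.load(saved)     # later, possibly in another process
remaining = plan[resumed.next_step:]
print(remaining)
```

Because each step is stored with its request and response, the same record doubles as an audit trail for debugging.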

Core components and operations

Model Context Protocol (MCP) works through a set of defined components and communication methods. These let AI models interact smoothly with other tools and manage tasks by agreeing on features and using reliable ways to exchange information.

MCP clients and servers

MCP uses a clear client-server setup. An MCP client is the component inside a host application (think desktop AI apps, plugins, or IDEs) that talks to an MCP server on the host’s behalf.

The client sends requests and gets responses as it works. The MCP server is the program or service that fulfills these requests. Each server might offer features like document summarisation, code completion, or data analysis.

Servers need to be lightweight and modular so they can run on different systems and scale up easily. They keep track of which features they provide, who’s connected, and which sessions are active.

This split between client and server makes things flexible and secure. Their relationship is always one-to-one, but a host application can connect to several servers at once, making the architecture highly modular and scalable. For more on this setup, check out this overview of the MCP architecture.

Message transport mechanisms

Message transport is how data moves between MCP clients and servers. The protocol supports different transport options, so it can work in a bunch of situations.

Common message transports include stdio-based communication, which uses standard input and output streams, or network-based options like HTTP or HTTP streaming. Network transports, such as ones built on Java’s HttpClient with HTTP streaming, offer low-latency, two-way communication, which is handy for operations needing immediate feedback.

Some use cases need two-way connections for both synchronous and asynchronous operations, so it’s important for the protocol to support multiple transport types. The transport choice affects performance, reliability, and whether MCP can run over local or remote networks.

This flexibility is a big part of what makes MCP adaptable across different development and production environments. If you want a deeper dive into transport methods, here’s an in-depth MCP guide.
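For the stdio option, a rough sketch of the framing helps. It assumes one JSON message per line, which is how stdio transports are commonly framed; the helper names are made up, and an in-memory `io.StringIO` stands in for a real process's standard input and output.

```python
import io
import json


def write_message(stream, message):
    """Write one message per line. json.dumps escapes embedded newlines,
    so each message always occupies exactly one line."""
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream):
    """Yield each non-empty line of the stream as a parsed message."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


pipe = io.StringIO()  # stands in for a subprocess pipe
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "result": {"note": "line one\nline two"}})

pipe.seek(0)
received = list(read_messages(pipe))
print(len(received), received[0]["method"])
```

Swapping the stream for an HTTP connection changes how bytes travel, not what the messages look like, which is why the same client logic can run locally or remotely.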

Capability and version negotiation

Before a client and server start working together, they have to agree on what features and protocol versions they both support. This process, called capability negotiation and protocol version negotiation, keeps things compatible and cuts down on errors from unsupported actions.

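During the initial handshake, the client states the protocol version and capabilities it supports, and the server replies with its own version and capabilities, such as the tools, resources, and prompts it offers. The client then uses only the features both sides have agreed on.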

This setup also makes upgrades easy. New capabilities can be added as servers and clients evolve, and both sides know which versions are in play.

Verifying abilities and protocol versions up front is key for secure, efficient operation. MCP avoids incompatibility headaches and keeps workflows smooth for all connected parts. More about capability management is in this explanation of MCP’s core components.
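The negotiation logic can be sketched as follows. The functions are illustrative, not SDK calls, and the version strings are example values. The sketch assumes the server echoes the requested version when it supports it and otherwise offers its newest one, leaving the client to decide whether to continue.

```python
SERVER_VERSIONS = ["2025-06-18", "2025-03-26", "2024-11-05"]  # newest first


def negotiate_version(requested, supported=SERVER_VERSIONS):
    """Server side: echo the requested version if supported,
    otherwise offer the newest version we know."""
    return requested if requested in supported else supported[0]


def client_accepts(offered, client_versions):
    """Client side: continue only if we also speak the offered version."""
    return offered in client_versions


def usable_features(wanted, server_capabilities):
    """Keep only the features the server actually announced."""
    return [name for name in wanted if name in server_capabilities]


offered = negotiate_version("2025-03-26")
print(offered, client_accepts(offered, ["2025-03-26", "2024-11-05"]))

server_capabilities = {"tools": {}, "resources": {}}  # announced in the handshake
print(usable_features(["tools", "prompts"], server_capabilities))
```

If `client_accepts` returns False, the safest move is to close the connection instead of guessing at behaviour the other side may not support.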

Integration with AI tools and resources

Model Context Protocol (MCP) lets AI models interact directly with external tools and information sources. It handles how these tools are discovered and made available, and how AI agents manage and access different resources during tasks.

Tool discovery and exposure

MCP sets a standard for how AI systems find and use different tools. Say an AI assistant needs live info—it can tap APIs, search files, or check databases with the same formats every time.

This approach lets the model work with business tools, content libraries, and dev platforms without a bunch of custom setup. Pretty handy if you ask me.

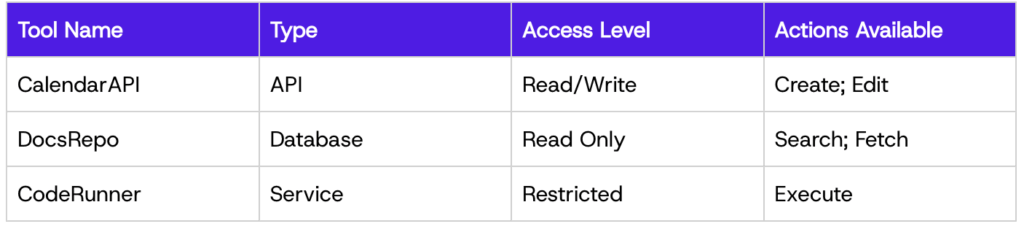

Tool discovery just means figuring out which resources an AI model can use in each situation. MCP spells out how agents see what’s available.

The system shares a list of compatible resources, with details like tool type, access level, and supported actions. That way, the AI can quickly spot the best tool for the job.

Here’s a simple table to show how tools can be organised:

With MCP, you can add or remove tools quickly and safely. It’s a flexible way to keep up with fast-changing tech, making AI more context-aware and ready for complex requests.
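A small sketch shows what a tool listing might contain and how an agent could check a call before making it. The two tools are invented for illustration; the `name`, `description`, and `inputSchema` fields follow the general shape of an MCP tool listing, with the schema written as plain JSON Schema.

```python
TOOLS = [  # hypothetical tools a server might advertise
    {
        "name": "search_files",
        "description": "Search local files by keyword",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    },
    {
        "name": "query_db",
        "description": "Run a read-only SQL query",
        "inputSchema": {
            "type": "object",
            "properties": {"sql": {"type": "string"}},
            "required": ["sql"],
        },
    },
]


def list_tools():
    """What a server might return when asked for its tools."""
    return {"tools": TOOLS}


def find_tool(listing, name):
    """Look a tool up by name; None means it is not on offer."""
    return next((t for t in listing["tools"] if t["name"] == name), None)


def missing_arguments(tool, arguments):
    """Required arguments the caller has not supplied yet."""
    required = tool["inputSchema"].get("required", [])
    return [key for key in required if key not in arguments]


listing = list_tools()
tool = find_tool(listing, "query_db")
print([t["name"] for t in listing["tools"]])
print(missing_arguments(tool, {}))
```

Because every tool describes itself the same way, adding a third tool is just one more entry in the listing.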

Agent and resource management

Agents handle tasks by talking to tools and resources. MCP gives them a consistent way to manage access, so they only use what they’re allowed.

When an agent needs data, it checks permissions and uses a standard protocol to request or change info. No more wild guesses.

MCP also keeps agents updated in real time about which resources are available or restricted. If a tool goes offline or access changes, the agent hears about it right away.

This helps the AI system avoid errors and adjust on the fly. It’s a relief when things just work.

MCP sets out simple rules for managing sessions, tracking which resources each agent uses and for how long. That boosts security and helps avoid clashes when several agents work at once.

Agents can safely balance tasks like pulling live docs and updating calendars or databases, even across multiple services.
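To illustrate permission checks and availability updates together, here is a toy registry. Everything in it is an assumption made for the example: the `ResourceRegistry` class, the `scheduler` agent, and the `calendar://team` URI. The notification body borrows the `notifications/resources/list_changed` method name to show what a change signal could look like.

```python
class ResourceRegistry:
    """Tracks which resources exist and which agent may read which one."""

    def __init__(self):
        self.available = set()
        self.grants = {}      # agent name -> set of resource URIs
        self.listeners = []   # callbacks told about availability changes

    def subscribe(self, callback):
        self.listeners.append(callback)

    def grant(self, agent, uri):
        self.grants.setdefault(agent, set()).add(uri)

    def set_available(self, uri, is_available):
        if is_available:
            self.available.add(uri)
        else:
            self.available.discard(uri)
        for notify in self.listeners:
            notify({"jsonrpc": "2.0",
                    "method": "notifications/resources/list_changed"})

    def read(self, agent, uri):
        if uri not in self.grants.get(agent, set()):
            raise PermissionError(f"{agent} may not read {uri}")
        if uri not in self.available:
            raise LookupError(f"{uri} is currently unavailable")
        return f"data from {uri}"


registry = ResourceRegistry()
events = []
registry.subscribe(events.append)

registry.set_available("calendar://team", True)
registry.grant("scheduler", "calendar://team")
print(registry.read("scheduler", "calendar://team"))

registry.set_available("calendar://team", False)  # resource goes offline
print(len(events))
```

The permission check runs before the availability check, so an agent without access learns nothing about whether a resource is online.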

Use cases in AI applications

Model Context Protocol (MCP) makes a real difference in AI applications by managing how context and info get shared. This helps AI systems feel more reliable, efficient, and easier to tweak.

Prompt engineering

Prompt engineering is all about crafting prompts so large language models (LLMs) give the right answers. MCP gives prompt engineers a clear way to deliver structured context, like previous chats, user history, and instructions, straight to the model.

With MCP, you can manage and share prompt templates across different LLMs and tools. Developers get more control over info flow, and context doesn’t get lost—even in multi-step or tricky conversations.

Key benefits:

- Consistent context transfer between different models

- Easy updating of prompt templates

- Reduced errors in handling user or system histories

Picture this: an MCP-enabled AI chat assistant remembers what you talked about and your preferences, even if you switch between systems or models.
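A shared prompt template could be sketched like this. The `summarise_thread` prompt, its arguments, and the `get_prompt` helper are hypothetical; the idea is simply that a named template plus arguments produces the same structured messages no matter which model receives them.

```python
from string import Template

PROMPTS = {  # hypothetical shared template store
    "summarise_thread": {
        "description": "Summarise a discussion in a given tone",
        "arguments": ["topic", "tone"],
        "template": Template("Summarise the discussion about $topic in a $tone tone."),
    }
}


def get_prompt(name, arguments, history=()):
    """Fill a named template and append it to any earlier messages."""
    prompt = PROMPTS[name]
    missing = [arg for arg in prompt["arguments"] if arg not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    text = prompt["template"].substitute(arguments)
    new_message = {"role": "user", "content": {"type": "text", "text": text}}
    return {"messages": list(history) + [new_message]}


history = [{"role": "user", "content": {"type": "text", "text": "Hi, I prefer short answers."}}]
result = get_prompt("summarise_thread", {"topic": "the Q3 roadmap", "tone": "neutral"}, history)

for message in result["messages"]:
    print(message["role"], "-", message["content"]["text"])
```

Updating the template in one place changes the prompt for every tool that asks for it by name, which is what makes templates easy to maintain.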

Retrieval-Augmented Generation (RAG)

With Retrieval-Augmented Generation (RAG), LLMs pull facts from external databases and combine them with language generation to answer questions. MCP standardises how this extra context gets delivered to the model, which bumps up accuracy and performance.

MCP lets RAG systems fetch, update, and share relevant docs or data as conversations unfold. Models get the exact context they need, right when they need it.

Imagine an AI assistant for doctors. It uses RAG to grab the latest medical guidelines and patient records. MCP coordinates how and when this info loads into the model, making things safer and smoother.
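The retrieval step can be shown with a deliberately simple sketch. It ranks documents by word overlap, which is a stand-in for the embedding search a real RAG system would likely use, and the document URIs and texts are invented placeholders.

```python
DOCUMENTS = {  # hypothetical document store: URI -> text
    "notes://guideline-a": "blood pressure targets for adults",
    "notes://guideline-b": "insulin dosing for adults with diabetes",
    "notes://cafeteria": "lunch menu for this week",
}


def score(query, text):
    """Count the words the query and the document have in common."""
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve(query, documents, k=2):
    """Return up to k document URIs, best match first, skipping non-matches."""
    ranked = sorted(documents, key=lambda uri: -score(query, documents[uri]))
    return [uri for uri in ranked if score(query, documents[uri]) > 0][:k]


def build_context(query, documents, k=2):
    """Bundle the retrieved text with the question, ready for the model."""
    uris = retrieve(query, documents, k)
    sources = "\n".join(f"[{uri}] {documents[uri]}" for uri in uris)
    return f"Sources:\n{sources}\n\nQuestion: {query}"


print(retrieve("blood pressure targets", DOCUMENTS))
print(build_context("blood pressure targets", DOCUMENTS))
```

Keeping the source URI next to each snippet lets the assistant say where an answer came from, which matters in settings like the medical example.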

Development and integration ecosystem

Developers can build strong AI solutions with tools like SDKs, libraries, and integration frameworks for MCP. These resources work with different languages and environments, making it easier to develop, launch, and connect AI models across platforms.

SDKs and libraries

SDKs make Model Context Protocol (MCP) more accessible for developers. The Python SDK is super popular and offers simple functions to connect AI models with tools and data sources.

It usually comes with libraries for authentication, network requests, and data formatting. For Java, the MCP Java SDK lets teams use MCP inside enterprise apps. You can plug it into existing Java projects to connect AI models to servers or cloud services.

Many projects are open-sourced on GitHub, so developers can check out or improve the code. Some SDKs support Docker, making it easy to run apps in containers for more consistent deployment.

Spring Boot and Spring AI integration

Spring Boot is a go-to Java framework for web services and microservices. Integration with MCP has come a long way, thanks to Spring Boot Starters.

These modules can act as client or server starters, letting apps connect to or expose MCP endpoints. Spring AI MCP makes it much easier to add natural language AI features to Spring apps.

By combining Spring Boot and MCP, you can quickly hook up AI assistants to business tools or content repositories. Integration often relies on Docker containers for testing and deployment, and lots of reference projects live on GitHub to help teams get started. This setup helps teams build modern, context-aware AI solutions with trusted frameworks and familiar workflows.

Security and limitations

Security and limitations are big concerns with Model Context Protocol (MCP). Protecting against unauthorised access, authenticating reliably, and adapting to changes are real challenges organisations have to face.

Authentication approaches

MCP supports different ways to authenticate, but right now, most folks use API keys. They’re simple and easy to plug in, but honestly, they don’t meet enterprise-grade security needs.

Bigger organisations want advanced authentication like OAuth, token-based systems, or tying into their existing identity providers. These methods help enforce user access, offer better tracking, and stop privilege creep.

The protocol will need to evolve to better support these tougher authentication approaches, especially as it rolls out to places with strict security controls.

Right now, the lack of strong enterprise-grade authentication worries companies handling sensitive or regulated data. Weak identity management means a higher risk of data leaks or misuse, and that risk only grows as things scale. For more on these issues, check out this overview on security with the Model Context Protocol.
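The difference between an API key and a scoped token is easier to see side by side. This sketch is not tied to MCP or to any identity provider: the claims dictionary, its field names, and the scope strings are assumptions, and a real system would verify the token's signature before trusting its claims.

```python
import hmac
import time


def check_api_key(presented, expected):
    """All-or-nothing check: whoever holds the key gets full access."""
    # compare_digest takes the same time wherever the strings differ,
    # so the comparison does not leak how much of the key was correct.
    return hmac.compare_digest(presented.encode(), expected.encode())


def check_token(claims, required_scope, now=None):
    """Scoped check on already-verified token claims:
    the token must be unexpired and must carry the needed scope."""
    now = time.time() if now is None else now
    return claims["expires_at"] > now and required_scope in claims["scopes"]


# Hypothetical claims, as they might look after signature verification.
claims = {"subject": "alice", "scopes": ["calendar:read"], "expires_at": 2_000}

print(check_api_key("s3cret", "s3cret"))
print(check_token(claims, "calendar:read", now=1_000))
print(check_token(claims, "calendar:write", now=1_000))
```

The token check can answer "may this user do this specific thing right now", while the key check can only answer "does the caller know the secret", which is the gap enterprise teams tend to worry about.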

Current limitations and future directions

MCP tackles the technical challenge of connecting lots of AI models to tools, but it still falls short on data governance and security compliance. Many regulated industries find MCP lacking in features needed to meet their standards.

Limitations include:

- Not enough support for advanced authentication methods

- Potential for breaking changes in the API as MCP evolves

- Gaps in monitoring and auditing

- Risk of data exposure in critical infrastructure

As MCP develops, the roadmap includes beefing up security and handling breaking changes more carefully. Teams working on MCP want to add better authentication standards and stronger controls for enterprise users. You’ll find more details in pieces covering MCP’s key limitations and security risks.

Real-world examples and future outlook

Real-world uses of Model Context Protocol (MCP) show how AI models are getting better at sharing info and working together. There’s a real push to set standards and build tools that help these systems connect and communicate.

Leading implementations

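MCP improves how AI models, tools, and external data sources talk to each other. Anthropic has made its Claude models more flexible by letting them share context with external tools through MCP. Agent-to-agent (A2A) communication is a related but separate effort, which originated at Google and is often discussed alongside MCP.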

Claude Desktop showed how MCP can let local tools chat with cloud-based models. FastMCP, a Python framework for building MCP servers, handles structured data, concurrent client connections, and streaming updates with server-sent events.

In a lot of workplaces, Slack bots use local MCP servers to pull context from remote servers and databases, including SQL. This keeps workflows moving and makes shared updates easy to see.

Features like structured logging and context sources help trace what data was used and where it came from. That’s a real bonus for users and developers trying to keep things clear.

Emerging standards and community efforts

New standards are coming together to make MCP easier to use and roll out across platforms. Open communities are working on shared schemas for context packets, ways to handle structured data, and real-time messaging over server-sent events, including reactive implementations built on Spring WebFlux.

Community-driven projects are refining how MCP manages structured logging and concurrent client connections. The goal is to let multiple tools and models collaborate at once.

There’s a push for more secure and flexible agent-to-agent (A2A) communication. By connecting local and remote servers under one standard, these efforts aim to make it simple for AI tools to work together at scale.

Open-source projects are even building MCP adapters to link data from different sources, so context stays clear and up to date at every step. For more about applications and what’s happening now, see Model Context Protocol explained on mljourney.com and more at Toolworthy.ai.

Wrapping up

The Model Context Protocol (MCP) is essential for the future of AI, enabling more flexible and secure interactions between AI models and external resources. By standardising communication, MCP simplifies AI development, enhances the capabilities of applications like prompt engineering and RAG, and supports a dynamic ecosystem of tools and integrations. While challenges in advanced authentication and data governance remain, ongoing community efforts and emerging standards continuously improve MCP’s robustness and applicability across diverse AI landscapes. As AI continues to evolve, MCP’s role as a universal connector will be crucial in building intelligent systems that are truly adaptable, reliable, and scalable.

Frequently asked questions

Model Context Protocol (MCP) standardises how AI models connect to outside data and systems. MCP keeps communication clear, controlled, and consistent in AI environments.

MCP is an open protocol that lets large language models (LLMs) get data and context from outside sources reliably. It acts as a shared language for different AI systems, making it easier to plug in new tools or information streams.

Developers use MCP to simplify building and updating AI apps. Integrating external data becomes more secure and efficient, so there’s less need for custom fixes every time something new comes in.

This means fewer errors and less manual work, letting teams scale projects faster.

MCP matters because it standardises how AI apps talk to external data sources. Developers get a reliable way to handle input and output, which makes these systems sturdier and more adaptable.

This setup helps AI adjust to new data and tools without forcing everyone to rewrite a bunch of code.

MCP acts a bit like a universal connector—think USB-C for data. It sets the rules for sharing, requesting, and processing info between AI models and all sorts of sources.

With that kind of consistency, adding new features to AI systems gets way easier. They can pull in fresh, relevant info whenever they need it, instead of getting stuck with outdated data.

The main parts of MCP are context handlers, standard message formatting, and a framework for authorising and controlling data access. These pieces help information move in a predictable and secure way.

MCP lets developers hook AI up to APIs, databases, and other tools using a single, unified protocol. If you want a deeper dive, check out this developer’s guide.

Anthropic introduced MCP in late 2024 to improve how AI apps interact with data and tools. Since then, lots of folks in the AI world have picked it up as a standard way to connect systems and resources.

Read more about the origin of MCP at this complete guide.
